Graphics and Technologies

The Board

The board uses a simplified coordinate system in which each grid cell is identified by integer X and Y values that can be converted to and from world-space coordinates (X, Y, Z). Wall pieces follow a special rule: the wall object is offset from the center of its cell so that it sits on the boundary between two cells. Each object's rotation is restricted to one of four directions (North, South, East, West).

Instead of traditional colliders, board objects use simplified “logical colliders”: each grid cell can hold any combination of five collision tags (center, north, south, east, west), depending on the objects present in it. Two objects that share a collision tag cannot occupy the same cell. When a player is moved around the board, a pathfinding algorithm can also check whether a path exists between the origin and the destination. The algorithm used is a simple breadth-first search.
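As a rough illustration of the grid conversion, logical colliders and breadth-first pathfinding described above, here is a minimal Python sketch (the project itself runs in Unity). The `Board` class, the helper names, the cell size, the Y-up world axis, and the movement rules (a wall tag on either side of a shared edge blocks the step, and a `center` tag blocks entering a cell) are all assumptions made for illustration, not the project's actual code.

```python
from collections import deque

# Hypothetical direction table: name -> (dx, dy, tag on the neighbour's facing side)
DIRECTIONS = {
    "north": (0, 1, "south"),
    "south": (0, -1, "north"),
    "east": (1, 0, "west"),
    "west": (-1, 0, "east"),
}


def grid_to_world(x, y, cell_size=1.0):
    """Assumed mapping: grid X/Y lie on the world X/Z plane, with world Y pointing up."""
    return (x * cell_size, 0.0, y * cell_size)


def world_to_grid(position, cell_size=1.0):
    """Inverse of grid_to_world: snap a world position to the nearest cell."""
    return (round(position[0] / cell_size), round(position[2] / cell_size))


class Board:
    def __init__(self, width, height):
        self.width = width
        self.height = height
        self.tags = {}  # (x, y) -> set of collision tags already taken in that cell

    def can_place(self, cell, tags):
        """An object fits only if none of its tags is already taken in the cell."""
        return not (self.tags.get(cell, set()) & set(tags))

    def place(self, cell, tags):
        if not self.can_place(cell, tags):
            return False
        self.tags.setdefault(cell, set()).update(tags)
        return True

    def can_step(self, cell, direction):
        """Assumed rule: a wall on the shared edge or an occupied center blocks the step."""
        dx, dy, opposite = DIRECTIONS[direction]
        target = (cell[0] + dx, cell[1] + dy)
        if not (0 <= target[0] < self.width and 0 <= target[1] < self.height):
            return False
        if direction in self.tags.get(cell, set()):
            return False
        target_tags = self.tags.get(target, set())
        return opposite not in target_tags and "center" not in target_tags

    def find_path(self, start, goal):
        """Breadth-first search; returns the list of cells, or None if unreachable."""
        came_from = {start: None}
        queue = deque([start])
        while queue:
            cell = queue.popleft()
            if cell == goal:
                path = []
                while cell is not None:
                    path.append(cell)
                    cell = came_from[cell]
                return path[::-1]
            for direction, (dx, dy, _) in DIRECTIONS.items():
                nxt = (cell[0] + dx, cell[1] + dy)
                if nxt not in came_from and self.can_step(cell, direction):
                    came_from[nxt] = cell
                    queue.append(nxt)
        return None
```

For example, on a 3x3 board with an object occupying the `center` tag of cell (1, 0), `find_path((0, 0), (2, 0))` has to route around that cell. Because breadth-first search explores cells in order of distance, the first path it finds is also a shortest one in number of steps.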

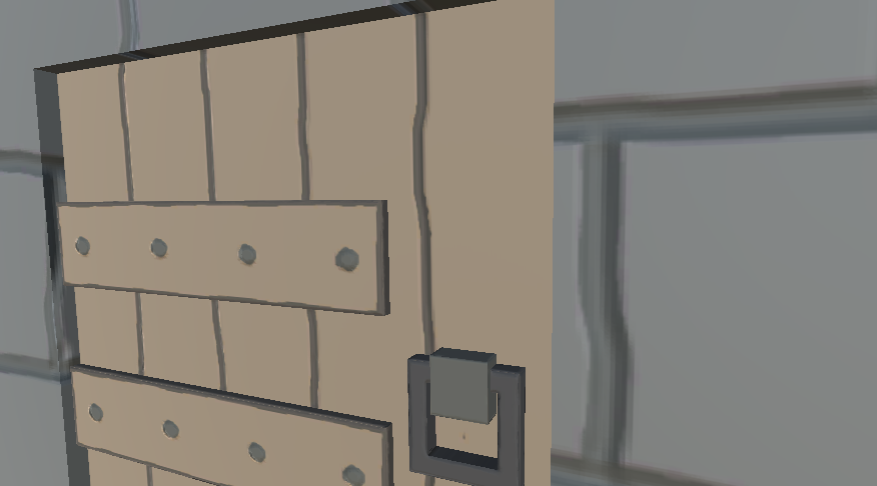

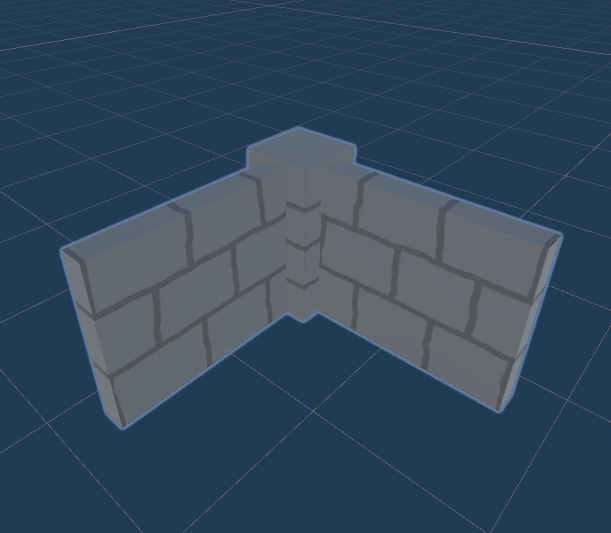

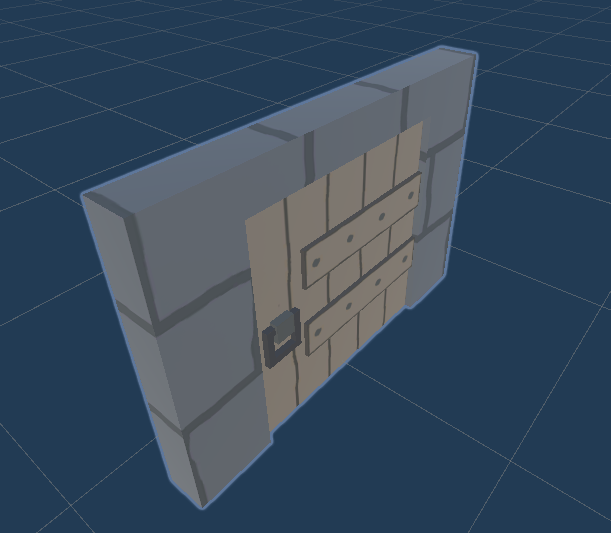

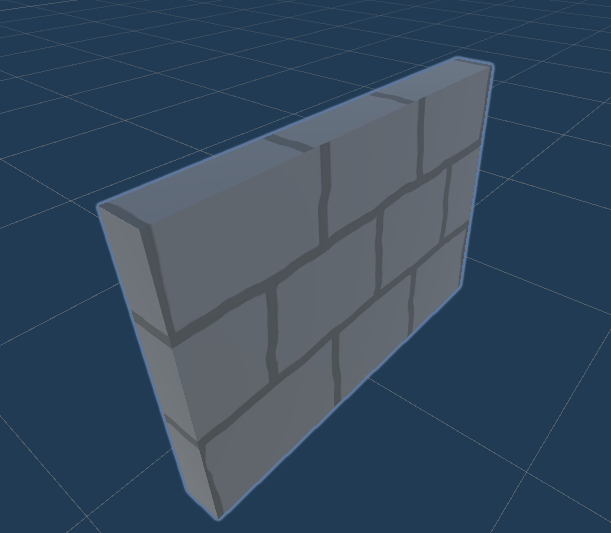

World models were created in Blender, with textures hand-painted in GIMP. Later in the project, normal maps were also created by sculpting ridges and scraping edges on a high-poly copy of each world model, using the textures as a guideline. The details from the high-poly model can then be “baked” into a normal map for the low-poly model, retaining most of the detail without the performance cost of rendering extremely complex models.

Unity

We built our application on the Unity game engine. This was an obvious choice for us, since everyone on the team already had at least some experience with it.

Mirror Networking

Making the game multiplayer was a crucial part of this project, as we intended it to let people meet online. Since none of us had experience with netcode, we chose an existing networking API to simplify our work.

Mirror was a great choice because it offers high-level functions and is well documented. We were able to create a shared environment that every player can interact with, with changes propagated to everyone else connected to the same “room”. It was also quite easy to set up a public server so that multiple people can connect over the internet.

However, Mirror has its flaws as well. As a ready-made tool, it is especially good at one particular job, but it is much harder to make it work in an unusual way. It is perfect for creating an MMO game, where every player has the same role. We, however, wanted to define two very distinct roles: Master and Player. Although we managed to differentiate them, this was very hard to achieve.

Main Menu Scene, UI and Particle Effects

For easier management, the project is split into two scenes: the main menu scene and the game scene. The main menu scene combines the free pixel-style asset Voxy Legends with our own world models. It is rendered in real time with animated particle effects and baked global illumination with soft shadows to create a more realistic dungeon atmosphere.

To keep the pixel style consistent throughout the game, all UI elements are pixel art, either drawn in Photoshop or adapted from the SimplePixelUI asset, and are animated to support smooth hover, pop-up and disappear effects. The fire particle effects are also based on a pixelated material and are built by layering four separate particle systems, which represent the outer flame, the inner flame, the glow, and the sparks.

When switching from the main menu to the game scene, the user's chosen role (master or player) and the IP address to connect to need to be carried over. To achieve this, Unity PlayerPrefs is used to store this data locally.

Face Tracking

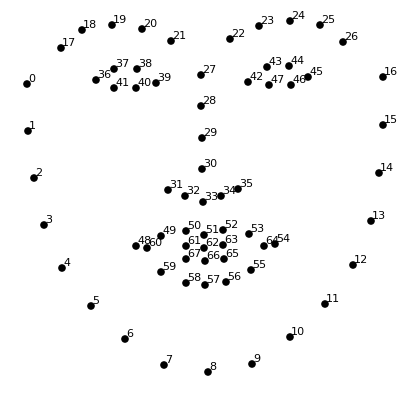

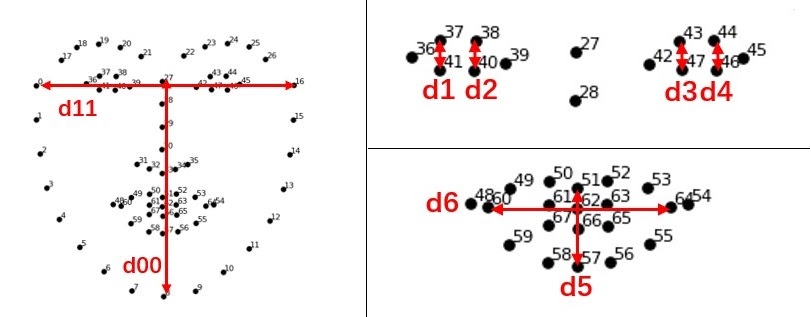

The face tracking system starts with a face landmark tracking step. 68 face landmarks are tracked using the Dlib and OpenCV libraries, and are later used to estimate the head position, the head orientation and the facial expression features.

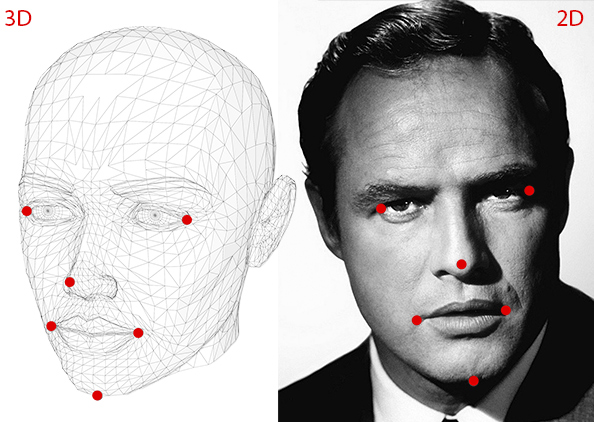

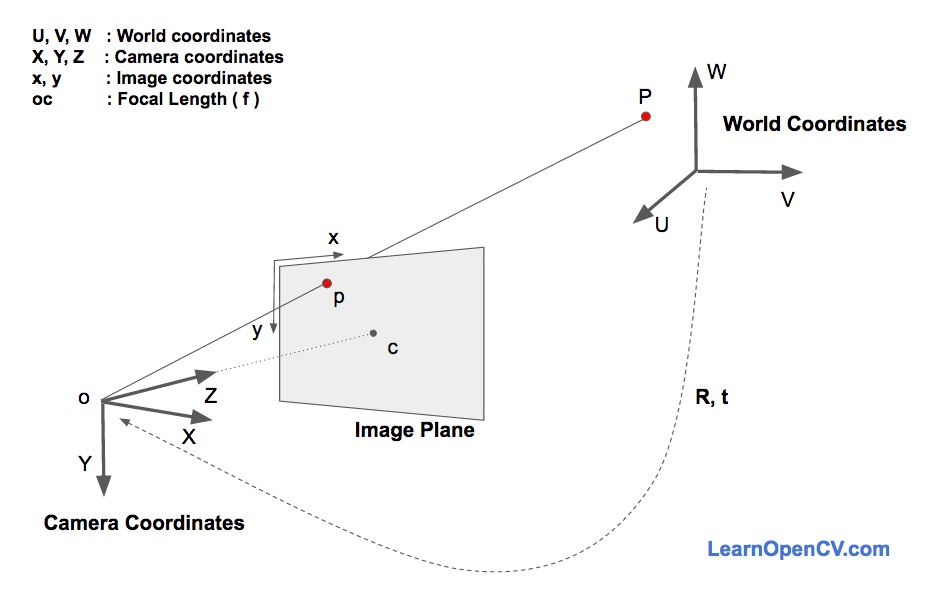

Based on the tracked landmarks, the orientation and position of the head in 3D space can be estimated from a single monocular camera by solving the Perspective-n-Point (PnP) problem.

In general, the idea is to transform the 3D points from world coordinates into camera coordinates. The 3D points in camera coordinates can then be projected onto the image plane using the intrinsic parameters of the camera (focal length, optical center, etc.). PnP looks for the rotation and translation for which these projections best match the tracked 2D landmarks.
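The two steps above (world to camera, then camera to image) can be sketched in plain Python as follows. This only shows the forward projection. Recovering the rotation and translation from tracked landmarks is the inverse problem, for which a solver such as OpenCV's `cv2.solvePnP` is typically used. The function names and all numeric values here are illustrative assumptions, not the project's calibration.

```python
def world_to_camera(point, rotation, translation):
    """Apply X_cam = R * X_world + t, with R given as a 3x3 nested list."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def camera_to_image(point_cam, fx, fy, cx, cy):
    """Pinhole projection: u = fx * X / Z + cx, v = fy * Y / Z + cy."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


# Illustrative values only: identity rotation, a head 2 units in front of the
# camera, and made-up intrinsics for a 640x480 image.
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = (0.0, 0.0, 2.0)
nose_tip_world = (0.1, -0.05, 0.0)  # hypothetical 3D model point

u, v = camera_to_image(
    world_to_camera(nose_tip_world, R, t),
    fx=500.0, fy=500.0, cx=320.0, cy=240.0,
)
```

Here `fx` and `fy` are the focal lengths in pixels and `(cx, cy)` is the optical center, which are the intrinsic parameters mentioned above.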

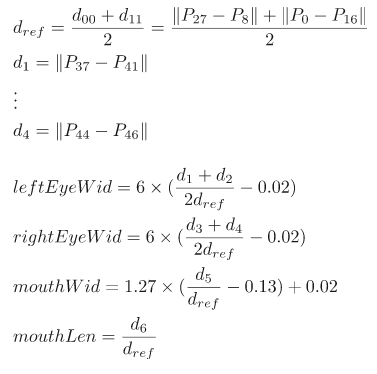

Facial expression feature extraction focuses on tracking the openness of the eyes and mouth, together with the distance between the eyes and eyebrows. The core algorithm here is the Eye Aspect Ratio (EAR), the most commonly used feature for detecting eye blinks.

This provides the basis for openness detection for both the mouth and the eyes. On top of it, rotational invariance is added to the computation by defining a reference distance that is insensitive to rotation.
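For reference, the standard Eye Aspect Ratio for six eye landmarks p1..p6 is EAR = (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|). The sketch below computes it in plain Python. The sample coordinates and the blink threshold are made-up values for illustration, and the rotation-insensitive reference distance mentioned above is not reproduced here because its definition is not given.

```python
import math


def eye_aspect_ratio(eye):
    """eye: six (x, y) landmarks ordered p1..p6 around the eye.

    p1 and p4 are the eye corners, p2 and p3 lie on the upper lid,
    p5 and p6 lie on the lower lid.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


# Made-up landmark coordinates for an open and a nearly closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]

BLINK_THRESHOLD = 0.2  # hypothetical value; would need tuning per camera and user

ear_open = eye_aspect_ratio(open_eye)
ear_closed = eye_aspect_ratio(closed_eye)
is_blinking = ear_closed < BLINK_THRESHOLD
```

Because the ratio divides the lid distances by the eye width, it stays roughly constant when the face moves closer to or further from the camera, and drops toward zero as the eye closes. In the 68-point Dlib layout, the two eyes correspond to landmark indices 36 to 41 and 42 to 47 (0-based).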

Before the face tracking data is mapped onto the actual model, the raw detection results turn out to be quite noisy and unstable. Therefore, a Kalman filter and a median filter are applied to reduce noise and stabilize the model animation. The Kalman filter is used during landmark tracking so that history information is used rather than relying only on the location detected in the current frame. The median filter is applied for further smoothing by running through the signal entry by entry, replacing each entry with the median of its neighboring entries over a window of 5 frames.
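The two filtering stages can be sketched as follows. This is a simplified stand-in rather than the project's filter: the Kalman filter below is a scalar, constant-value model applied to a single coordinate, its noise variances are hypothetical, and the median filter is assumed to be causal (it uses the last 5 frames, since future frames are not available in live tracking).

```python
from collections import deque
from statistics import median


class ScalarKalman:
    """One-dimensional Kalman filter assuming the tracked value changes slowly."""

    def __init__(self, process_var=1e-3, measurement_var=1e-1):
        self.q = process_var      # how much the true value may drift per frame
        self.r = measurement_var  # how noisy each detection is
        self.x = None             # current estimate
        self.p = 1.0              # variance of the current estimate

    def update(self, measurement):
        if self.x is None:
            self.x = measurement  # initialise from the first detection
            return self.x
        self.p += self.q                      # predict: uncertainty grows
        gain = self.p / (self.p + self.r)     # weight given to the new detection
        self.x += gain * (measurement - self.x)
        self.p *= 1.0 - gain
        return self.x


class MedianFilter:
    """Sliding-window median over the most recent `size` values."""

    def __init__(self, size=5):
        self.window = deque(maxlen=size)

    def update(self, value):
        self.window.append(value)
        return median(self.window)


# A single-frame spike is removed entirely by the 5-frame median.
spike_filter = MedianFilter(size=5)
smoothed = [spike_filter.update(v) for v in [1, 1, 100, 1, 1]]

# The Kalman estimate moves only part of the way toward a sudden jump.
kalman = ScalarKalman()
estimates = [kalman.update(z) for z in [0.0, 0.0, 0.0, 10.0]]
```

The two filters complement each other: the median discards isolated outliers outright, while the Kalman filter blends each new detection with the history, trading a little lag for a steadier signal.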

Facial Animations

The face is animated using morph target animation. This technique starts from a default neutral face and a set of premade expressions that the face can morph into. These premade expressions are called blend shapes, and we created one for each movable facial feature. The blend shapes allow us to interpolate between the neutral expression and the modified ones, and since this can be done for all blend shapes at the same time, it yields a large range of possible facial expressions.
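The interpolation described above amounts to adding a weighted offset per blend shape to every vertex of the neutral face: v = v_neutral + Σ w_i (v_target_i - v_neutral). A minimal Python sketch of that formula follows. The function name, the blend shape names and the two-vertex "mesh" are hypothetical and only serve to show the arithmetic.

```python
def blend(neutral, targets, weights):
    """Combine several blend shapes at once.

    neutral: list of (x, y, z) vertices of the neutral face.
    targets: blend shape name -> list of vertices with the same layout.
    weights: blend shape name -> weight, 0.0 (neutral) to 1.0 (full expression).
    """
    result = []
    for i, base in enumerate(neutral):
        vertex = list(base)
        for name, weight in weights.items():
            target = targets[name][i]
            for axis in range(3):
                # Add this shape's offset from the neutral pose, scaled by its weight.
                vertex[axis] += weight * (target[axis] - base[axis])
        result.append(tuple(vertex))
    return result


# Toy example: vertex 0 belongs to the jaw, vertex 1 to an eyebrow.
neutral_face = [(0, 0, 0), (1, 0, 0)]
shapes = {
    "mouth_open": [(0, -1, 0), (1, 0, 0)],
    "brow_up": [(0, 0, 0), (1, 0.5, 0)],
}
face = blend(neutral_face, shapes, {"mouth_open": 0.5, "brow_up": 1.0})
```

In this toy example the jaw vertex moves halfway toward the open-mouth pose while the eyebrow vertex moves fully to the raised pose, which shows how independent features combine into one expression.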